Infrared Imaging Comes of Age

Smaller size, greater flexibility and faster results give imaging technology greater appeal in facilities.

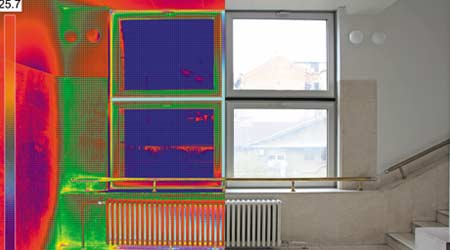

Maintenance and engineering managers and their staffs have powerful new diagnostic tools at their disposal thanks to the development of a new generation of infrared imaging systems. While infrared imaging has been in use for several decades, this generation is different because of its ease of use and its affordability.

Unlike past systems that required cryogenic cooling, today’s systems operate at ambient temperatures, are roughly the size of a standard SLR digital camera, and can provide immediate results. This flexibility allows managers to use the technology in an expanding array of applications.

Depending on system features, costs can range from around $500 to well over $10,000, so managers looking to use the technology should understand the imaging requirements of their specific application before selecting a system. A system with too little performance will produce poor results; an overly elaborate one wastes money and may be unnecessarily complex to operate.

System features

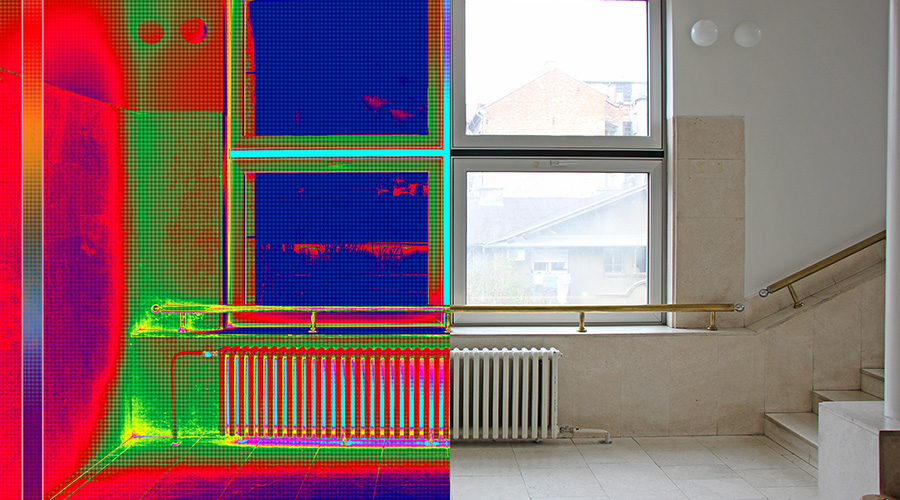

A number of key system features directly affect both the quality of the images a system produces and the system's cost. In general, the higher the image quality, the more the system costs. Two of the most important features affecting quality and cost are detector resolution and thermal sensitivity.

Detector resolution is determined by the number of pixels in the detector. The most common resolutions include 160 by 120 pixels, 320 by 240 pixels, and 640 by 480 pixels. The higher the resolution, the clearer the image. Higher resolution also improves measurement accuracy and allows the camera to be farther from the target while still capturing the same detail as a lower-resolution camera.
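To make the distance trade-off concrete, here is a minimal Python sketch. It assumes a simple pinhole geometry in which the lens's horizontal field of view covers a width of 2 × d × tan(FOV / 2) at distance d, divided evenly among the pixels in each row. The 24-degree lens and the 1 cm detail target are hypothetical values, not figures from this article. Under those assumptions, doubling the number of pixels across the detector doubles the distance at which the camera resolves the same detail.

```python
import math

# Common detector resolutions named in the article (width x height, in pixels).
RESOLUTIONS = [(160, 120), (320, 240), (640, 480)]

# Hypothetical values for illustration only; not taken from the article.
HFOV_DEG = 24.0   # horizontal field of view of the lens, in degrees
DETAIL_M = 0.01   # finest detail we want one pixel to cover, in meters


def pixel_footprint(distance_m: float, hfov_deg: float, pixels_across: int) -> float:
    """Width (m) of target surface seen by one pixel, assuming a pinhole model."""
    scene_width = 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)
    return scene_width / pixels_across


def max_distance_for_detail(detail_m: float, hfov_deg: float, pixels_across: int) -> float:
    """Farthest distance (m) at which one pixel still covers no more than detail_m."""
    return detail_m * pixels_across / (2.0 * math.tan(math.radians(hfov_deg) / 2.0))


if __name__ == "__main__":
    for width, height in RESOLUTIONS:
        distance = max_distance_for_detail(DETAIL_M, HFOV_DEG, width)
        print(f"{width} x {height} ({width * height:,} pixels): "
              f"about {distance:.1f} m for {DETAIL_M * 100:.0f} cm per pixel")
```

Because the per-pixel footprint grows linearly with distance and shrinks linearly with pixel count, each step up in the resolutions listed above roughly doubles the usable working distance for the same detail.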

Thermal sensitivity is the smallest temperature difference a camera can detect, and it is an important factor in image quality. A more sensitive camera, one that can detect smaller differences, produces higher-quality images, while a less sensitive camera tends to produce a noisy or grainy image. The average thermal sensitivity for infrared imaging cameras is about 0.4 degrees. Differences in sensitivity of a fraction of a degree might not seem large, but they have a great impact on image quality.

Another factor to consider is the system's temperature range: the minimum and maximum temperatures it can measure. Across the cameras available, ranges extend from slightly below 0 degrees to more than 2,000 degrees, but not every camera covers that full span, so managers should select a system whose temperature range matches the requirements of their applications.
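Matching a camera's range to an application amounts to a simple containment check, sketched below in Python. The camera names and ranges are hypothetical, not real product specifications, and all temperatures are assumed to be in one consistent unit. A camera qualifies only when its minimum is at or below the application's lowest temperature and its maximum is at or above the highest.

```python
from dataclasses import dataclass


@dataclass
class Camera:
    """A camera's measurement range; all temperatures in one consistent unit."""
    name: str
    min_temp: float
    max_temp: float


# Hypothetical cameras for illustration; not real product specifications.
CAMERAS = [
    Camera("Camera A", -20.0, 350.0),
    Camera("Camera B", -20.0, 1200.0),
    Camera("Camera C", 0.0, 2000.0),
]


def covers(camera: Camera, app_min: float, app_max: float) -> bool:
    """True if the camera can measure every temperature the application requires."""
    return camera.min_temp <= app_min and app_max <= camera.max_temp


def suitable_cameras(cameras: list[Camera], app_min: float, app_max: float) -> list[str]:
    """Names of the cameras whose range spans the application's range."""
    return [c.name for c in cameras if covers(c, app_min, app_max)]


if __name__ == "__main__":
    print(suitable_cameras(CAMERAS, 0.0, 150.0))
    print(suitable_cameras(CAMERAS, -10.0, 1000.0))
```

In this example, a job that stays between 0 and 150 degrees can use any of the three cameras, while one spanning -10 to 1,000 degrees narrows the choice to a single model.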

Yet another factor to consider when looking at systems is the camera's field of view. Measured in degrees horizontally and vertically, the field of view determines how large an area the camera can see at a given distance. A wider field of view lets the user see a larger area, but wider is not always better. Because the number of pixels in the detector stays the same, a wider field of view spreads those pixels over a larger area, so each pixel covers more of the target and the camera's ability to measure the temperature of small areas is reduced.
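The field-of-view trade-off can be illustrated with a short Python sketch that again assumes simple pinhole geometry. For a fixed 320-pixel-wide detector at a fixed distance, widening the lens angle enlarges the area imaged but reduces the number of pixels that land on a small target. The 3 m distance, the 5 cm component, and the three lens angles are hypothetical examples chosen for illustration.

```python
import math

# Hypothetical example values, chosen only for illustration.
PIXELS_ACROSS = 320                 # e.g. a 320 x 240 detector
DISTANCE_M = 3.0                    # camera-to-target distance in meters
TARGET_WIDTH_M = 0.05               # a 5 cm component being inspected
LENS_HFOV_DEG = (12.0, 24.0, 45.0)  # narrow, standard, and wide lenses


def scene_width(distance_m: float, hfov_deg: float) -> float:
    """Width (m) of the area imaged at a given distance, pinhole model."""
    return 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)


def pixel_footprint(distance_m: float, hfov_deg: float, pixels_across: int) -> float:
    """Width (m) of target surface covered by a single pixel."""
    return scene_width(distance_m, hfov_deg) / pixels_across


def pixels_on_target(target_m: float, distance_m: float,
                     hfov_deg: float, pixels_across: int) -> float:
    """Approximate number of pixels spanning the target's width."""
    return target_m / pixel_footprint(distance_m, hfov_deg, pixels_across)


if __name__ == "__main__":
    for hfov in LENS_HFOV_DEG:
        print(
            f"{hfov:>4.0f} deg lens: sees {scene_width(DISTANCE_M, hfov):.2f} m wide, "
            f"{pixel_footprint(DISTANCE_M, hfov, PIXELS_ACROSS) * 1000:.1f} mm per pixel, "
            f"about {pixels_on_target(TARGET_WIDTH_M, DISTANCE_M, hfov, PIXELS_ACROSS):.1f} "
            f"pixels across the target"
        )
```

With these assumptions, the 45-degree lens images roughly four times the width of the 12-degree lens but puts only about a quarter as many pixels on the 5 cm component.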
